When people hear or see something that is not there, we call it a hallucination.

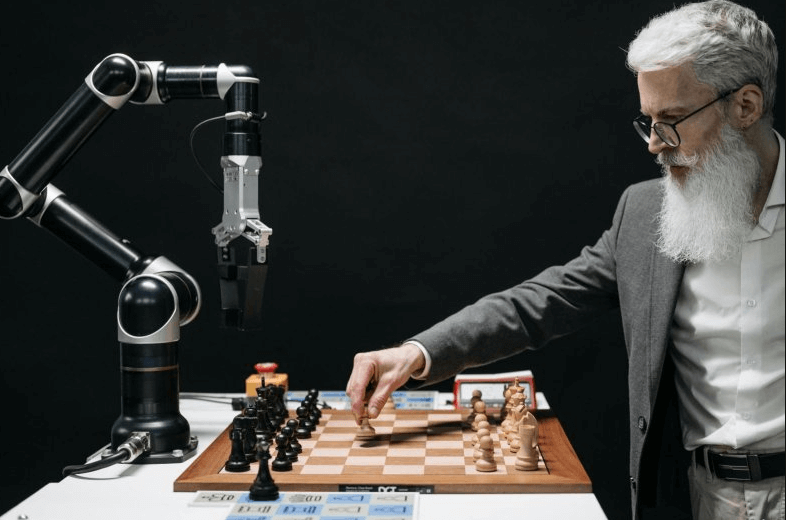

But did you know that artificial intelligence systems can do something similar? They do not dream or see visions, but they can generate false or misleading output that looks convincing. In the tech world, this is known as an AI hallucination.

From chatbots like ChatGPT to image generators like DALL-E and even autonomous vehicles, AI hallucinations are quickly becoming a problem, and one with real-world consequences.

What Exactly Is an AI Hallucination?

An AI hallucination occurs when a model produces information that sounds plausible but is false or irrelevant to the request.

It can take different forms depending on the system. A chatbot, for example, might confidently cite a research paper that does not exist, while an image recognition system might misidentify what a picture shows.

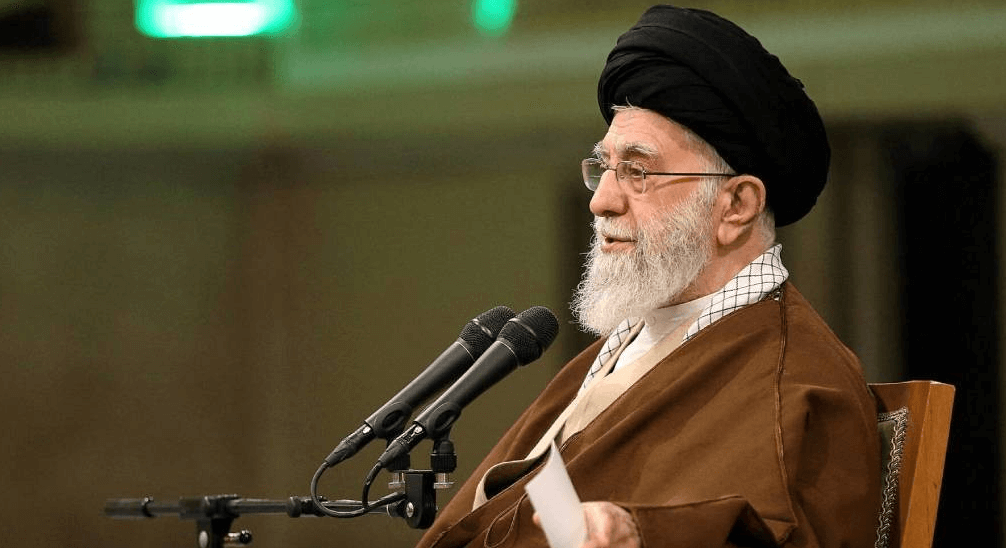

This is not just a hypothetical problem. In 2023, a New York attorney prepared a legal brief with the help of ChatGPT, only for the court to discover that the AI had invented cases cited in it. That is not just embarrassing; it can change real outcomes.

When Hallucinations Become Harmful

Some AI hallucinations are fairly harmless, such as a chatbot giving a wrong answer to a trivia question. But in high-stakes fields like law, medicine, and transportation, the consequences can be serious or even fatal.

Consider an autonomous vehicle that misidentifies an object on the road, a health insurance algorithm that wrongly deems a patient ineligible for treatment, or a speech-to-text system that inserts the wrong words into court or medical records. In mission-critical settings, small errors can have large consequences.

Why Do AI Systems Hallucinate?

AI models are trained on huge datasets of text, images, or audio, from which they learn patterns. When given a prompt, they use those patterns to generate new content.

But when the system knows nothing about a topic, or its training data covers it only partially, it may “fill in the blanks” with plausible guesses. That is when hallucinations happen.
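
To make the “fill in the blanks” idea concrete, here is a deliberately tiny sketch in Python. It is only an analogy, assuming a toy “bigram” model that learns which word tends to follow which. Real systems like ChatGPT use large neural networks, but they also generate text by predicting a likely continuation rather than by checking facts. The corpus and the names `train_bigrams` and `continue_text` are hypothetical, made up for this illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy training data: every sentence here is true.
CORPUS = [
    "paris is the capital of france",
    "the capital of france is paris",
    "berlin is the capital of germany",
    "lyon is a city in france",
    "france is famous for cheese",
]

def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def continue_text(follows, prompt, n_words):
    """Greedily append the most frequent next word, up to n_words times."""
    words = prompt.split()
    for _ in range(n_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # no pattern learned for this word, so stop
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

model = train_bigrams(CORPUS)
print(continue_text(model, "lyon is the", 3))
# -> lyon is the capital of france
```

Every sentence the model saw was true, yet when asked to continue “lyon is the,” it stitches familiar patterns into a fluent, false claim: Lyon is not the capital of France. Nothing in the model checks its output against reality.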

For example, image classifiers can mistake a blueberry muffin for a chihuahua. It’s amusing, but it shows how fragile pattern recognition can be when context is missing or misleading.

Creativity vs. Hallucination

It is important to distinguish creative output from hallucinations. Surprises are welcome when you ask an AI to write a short story or create a dreamlike piece of digital art. But when you ask for facts, directions, or legal references, hallucinations are a real problem.

The key difference is intent. Invention is valuable in fiction and art, but when accuracy and trust matter, hallucinations can destroy confidence and even cause harm.

Can We Prevent AI Hallucinations?

Technology companies are tackling the issue by improving training data, applying stricter guardrails to model behavior, and making AI systems more explainable. But no approach is perfect, and hallucinations remain an ongoing problem.

That is why users need to stay cautious. Always verify AI-generated content, especially when the stakes are high. Check it against credible sources, consult experts, and remember that even the most capable systems make mistakes.

Final Thoughts

As AI becomes more deeply embedded in our lives, from chatbots and automated customer service to self-driving cars, understanding its limitations has never been more important. AI is remarkable, but it is not flawless.

Recognizing where and when hallucinations happen is the start of learning how to use these tools safely, ethically, and sensibly.
